Roboticists often copy nature, crafting humanoid robots for household chores, worm-style machines for crawling through tunnels and four-legged contraptions that look like cheetahs for running and leaping. But they usually design an animal-like robot body first and then train an AI to control it. In living creatures, though, the body and brain evolve together to tackle complex tasks. So some researchers are borrowing a page from nature’s playbook to design intelligent, adaptive robots.

In the latest example, evolutionary roboticists at the Massachusetts Institute of Technology have created a virtual environment where algorithms can design and improve both a soft robot’s physical form and its controller so they evolve concurrently. Within this digital space, called Evolution Gym, algorithms can develop robots for more than 30 different tasks, including carrying and pushing blocks, doing backflips, scaling barriers and climbing up shafts. When the M.I.T. researchers ran their own algorithms in the program, the software developed a more effective robot than a human designer did on every single task.

“The future goal is to take any task and say, ‘Design me an optimal robot to complete this task,’” says Jagdeep Bhatia, an undergraduate student at M.I.T.’s Computer Science and Artificial Intelligence Laboratory, who led the work. He presented the research at the Conference on Neural Information Processing Systems on December 9.

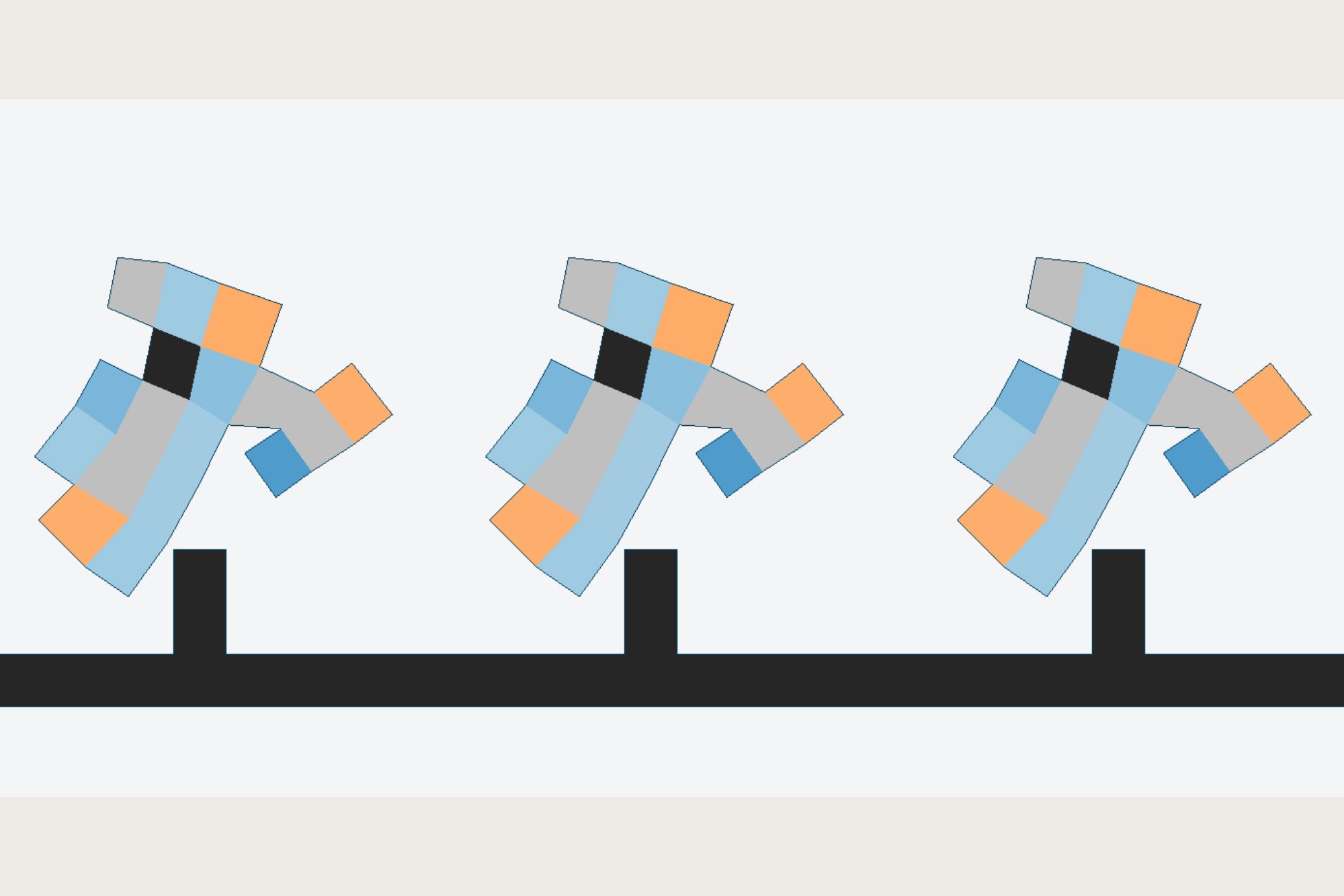

Evolution Gym relies on two algorithms that bounce results back and forth. First, a design-optimization algorithm “generates a bunch of random robot designs,” Bhatia says. The algorithm creates each soft robot by combining up to 100 individual building blocks, which can be rigid or flexible and can move vertically or horizontally. Then these patchwork designs go to the control-optimization algorithm, which generates a “brain” for each robot that will enable it to perform a given task. This controller computes when and how much each block should be activated (for example, how far and how frequently a horizontally moving block actuates) so that the blocks all work together to move the robot as needed. Next, the various bot designs try an assigned task in Evolution Gym while the control-optimization algorithm measures how well they perform and returns the scores to the design algorithm.
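To make the two ingredients concrete, here is a minimal Python sketch of a random block design and a very simple “brain.” It is not Evolution Gym’s actual code or API. The 10 x 10 grid, the block-type names and the sine-wave controller are all assumptions chosen for illustration, with one amplitude (“how far”) and one frequency (“how frequently”) per moving block.

```python
import math
import random

# Hypothetical block types; the names and numbering are illustrative only.
EMPTY, RIGID, SOFT, H_ACT, V_ACT = range(5)
GRID_SIZE = 10  # assumed 10 x 10 grid, so a design has at most 100 blocks


def random_design(rng, size=GRID_SIZE):
    """Return a size x size grid of randomly chosen block types."""
    return [[rng.choice([EMPTY, RIGID, SOFT, H_ACT, V_ACT]) for _ in range(size)]
            for _ in range(size)]


def actuator_cells(design):
    """List the (row, col) positions of blocks that can move."""
    return [(r, c) for r, row in enumerate(design)
            for c, block in enumerate(row) if block in (H_ACT, V_ACT)]


def random_controller(design, rng):
    """Assign each moving block an amplitude and a frequency (assumed ranges)."""
    return {cell: {"amplitude": rng.uniform(0.0, 1.0),
                   "frequency": rng.uniform(0.5, 2.0)}
            for cell in actuator_cells(design)}


def actuation(controller, t):
    """Signal for each moving block at time t, within [-amplitude, amplitude]."""
    return {cell: p["amplitude"] * math.sin(2 * math.pi * p["frequency"] * t)
            for cell, p in controller.items()}


rng = random.Random(0)
design = random_design(rng)
controller = random_controller(design, rng)
signals = actuation(controller, t=0.25)
```

A real control-optimization algorithm would tune these numbers against a physics simulation rather than draw them at random; the sketch only shows what kind of quantities a controller has to decide.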

Enter evolutionary principles. The design algorithm throws out unfit configurations, “keeps the most fit designs, mutates them a little bit and sees if they perform even better,” Bhatia says. This goes on, with robots passing from the design algorithm to the controller algorithm to testing in the Evolution Gym environment and back again to the design algorithm until the system converges on the highest score. The process results in the best combination of design and control—or body and brain—to do the task.
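The loop Bhatia describes can be sketched in a few lines of Python. This is a toy under stated assumptions, not the M.I.T. team’s algorithm: the scoring function is a made-up stand-in for a simulated task, the inner controller search is plain random sampling, and the loop runs for a fixed number of generations instead of testing for convergence. All function names and parameters are hypothetical.

```python
import random

BLOCK_TYPES = [0, 1, 2, 3, 4]  # hypothetical codes: empty, rigid, soft, horizontal, vertical
SIZE = 5  # small grid to keep the toy example fast


def random_design(rng):
    """Return a SIZE x SIZE grid of random block types."""
    return [[rng.choice(BLOCK_TYPES) for _ in range(SIZE)] for _ in range(SIZE)]


def mutate(design, rng, rate=0.1):
    """Copy the design, re-rolling each block with probability `rate`."""
    return [[rng.choice(BLOCK_TYPES) if rng.random() < rate else b for b in row]
            for row in design]


def optimize_controller(design, rng, trials=20):
    """Toy inner loop: random search over one gain per moving block.

    Returns the best score found. The score is invented for illustration:
    moving blocks help, while every non-empty block adds a weight penalty.
    """
    n_act = sum(b in (3, 4) for row in design for b in row)
    n_blocks = sum(b != 0 for row in design for b in row)
    best = float("-inf")
    for _ in range(trials):
        gains = [rng.uniform(0, 1) for _ in range(n_act)]
        best = max(best, sum(gains) - 0.2 * n_blocks)
    return best


def co_design(generations=30, population=16, survivors=4, seed=0):
    """Keep the fittest designs, mutate them, rescore the children, repeat."""
    rng = random.Random(seed)
    scored = []
    for _ in range(population):
        d = random_design(rng)
        scored.append((optimize_controller(d, rng), d))
    best_per_generation = []
    for _ in range(generations):
        scored.sort(key=lambda pair: pair[0], reverse=True)
        elite = scored[:survivors]              # throw out unfit configurations
        best_per_generation.append(elite[0][0])
        children = [mutate(rng.choice(elite)[1], rng)
                    for _ in range(population - survivors)]
        scored = elite + [(optimize_controller(c, rng), c) for c in children]
    best_score, best_design = max(scored, key=lambda pair: pair[0])
    return best_design, best_score, best_per_generation


best_design, best_score, history = co_design()
```

Because surviving designs carry their scores forward, the best score recorded in `history` never drops from one generation to the next, which is the sense in which this toy loop “converges.”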

Sometimes the process leads to familiar shapes. For the climbing task, the winning design evolved two arms and two legs that help it shinny up a shaft like an ape. The best carrying bot looks like a cross between a puppy and a squishy shopping cart. But in most cases, the results are unexpected. Instead of resembling a real animal or a device a human would design, they look like something a toddler might have built with blocks.

Bhatia’s favorite resulted from a task in which the robot had to slip under randomly spaced tiles, then drag an object across the top of those tiles while still underneath them. For this job, the simulator designed a bot that unfolded itself once under the tiles and then slowly wiggled along to push the object above. It is a perfect example of the body and brain working together to act intelligently.

That is the beauty of evolutionary robotics, says Josh Bongard of the University of Vermont, who was not involved in the work. Copying robot body plans from nature often does not work, he says, “because dogs and humans evolved to fill very different environmental niches from those we try to introduce our humanoid or canine bots into.” Aviation is a good example, Bongard explains. “Early pioneers tried to make machines with flapping wings, but those prototypes failed,” he adds. “Only when we built non-bird-like machines did we get them to fly.” Similarly, robot bodies produced by evolutionary algorithms often look strange but seem to work well at given tasks.

Others have attempted to co-design virtual robot bodies and brains, Bhatia says, but they have focused on simple tasks such as walking and jumping. “One of the strongest points of our work is the number of tasks and number of unique tasks we developed,” he says.

Evolution Gym is open-source: Bhatia’s team created it to provide a benchmark platform where any researcher can design and test their own algorithms and compare approaches. In previous work, groups have typically developed their own virtual environments for such assessments. The new digital space gives researchers a common baseline to measure how well various algorithms stack up. “That allows people to measure progress—and that’s really important,” says Agrim Gupta, a computer science Ph.D. student, who conducts similar research at Stanford University. He recently published a paper on how intelligence not only can be attained through evolution but can also be developed from experience. Bongard concurs, saying that the new M.I.T. simulator will allow the field of evolutionary robotics “to move forward faster by clarifying which ways of evolving robots work better than others.”

Such assessments are necessary because the robots designed by evolutionary algorithms do not always work. The M.I.T. algorithms, for instance, could not successfully design robots for catching and lifting. This shows there is a lot more work to do in designing truly intelligent robots, Bhatia says, making a standard platform such as Evolution Gym even more important to collectively advance the development of robot design. As he puts it, “We are enabling the development of more intelligent AI algorithms to be able to create real-life smart robots in the future.”